For most, buttoning up a shirt is a simple task, one requiring little active thought as the mind is occupied by other tasks to get ready for the day. But for those who no longer have full use of their limbs — from stroke, spinal cord injury or another cause — the connection between brain and body that allows for this daily action is missing and, often, independence along with it.

As a young undergraduate mechanical engineering student at Tsinghua University in China, Xiaogang Hu was drawn to the assistive technologies that could help restore movement for these people. In a research group, he learned he had a knack for asking the right questions, too.

“I asked the principal investigator of the lab, ‘What is the major problem in assistive robots used for rehabilitation? What are we missing?’” said Hu, now the Dorothy Foehr Huck and J. Lloyd Huck Chair in Neurorehabilitation and an associate professor of mechanical engineering at Penn State. “The response was, ‘We don’t really understand well enough how biological systems work, and this knowledge gap blocks effective communications between biological systems and engineered assistive systems.’”

This answer inspired Hu to set out to solve the field’s overarching problem, while keeping the people who needed the solutions at the center of his work.

Improving hand dexterity, increasing independence

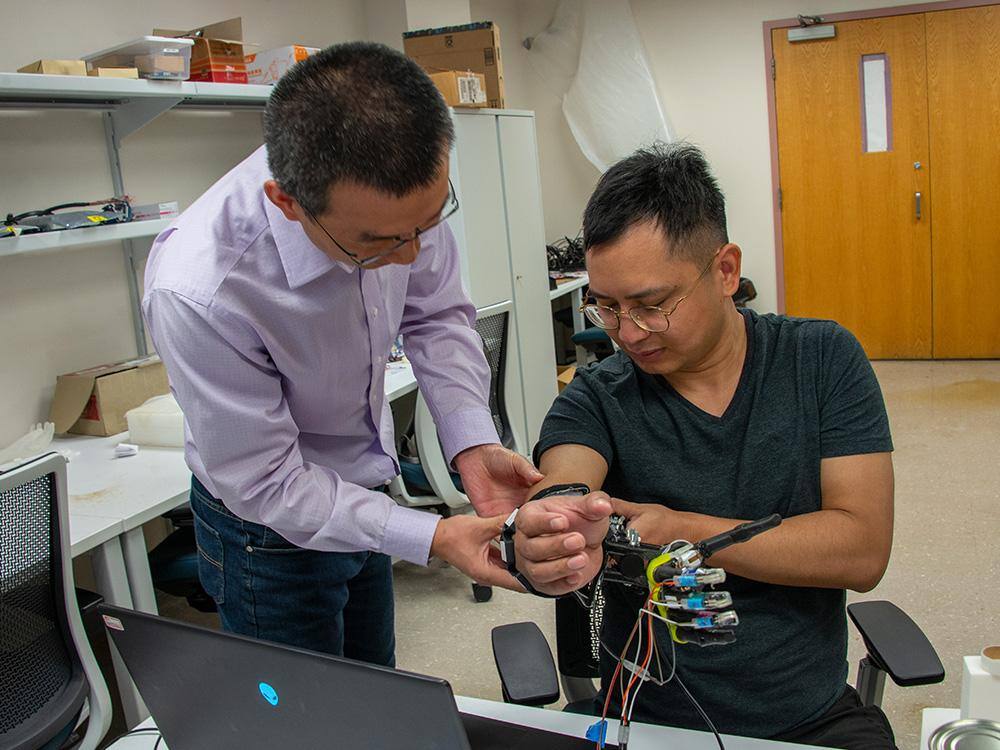

Hu’s research focuses on creating wearable assistive robotic systems for people with limited use of their limbs, especially their hands, with the goal of the user intuitively controlling the systems and devices on which they rely.

“We focus on developing assistive techniques in a way such that when the person controls those assistive devices, they would do it as if they are controlling their own biological limbs,” Hu said. “Interacting with assistive devices needs to be very natural. That’s one of the most critical factors that will determine whether the person will eventually use those systems or not. If the devices are difficult to use, people will just put them away.”

With that at the forefront of his research, Hu said he concentrates on using artificial intelligence (AI) to predict what a user is trying to do. The technology involves a wearable exoskeleton, such as a glove with motors and cables, and electrodes that can be placed on the skin’s surface or implanted into the skin. The electrodes pick up very weak electrical muscle signals, which the team processes with AI and other computational techniques to figure out what the user intends to do. The decoded signals can then be used to drive the exoskeleton.
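As a rough illustration of that signal-to-intent idea, the Python sketch below turns windows of muscle-signal samples into one amplitude feature per electrode channel and picks the closest known movement. It is a minimal sketch under stated assumptions, not the method used in Hu's lab: the two-channel setup, the movement names, the synthetic Gaussian "recordings" and the nearest-centroid classifier are all hypothetical stand-ins chosen for brevity.

```python
import math
import random


def rms(window):
    """Root-mean-square amplitude of one window of samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def features(channels):
    """One RMS value per electrode channel."""
    return [rms(ch) for ch in channels]


def fit_centroids(examples):
    """Average the feature vectors recorded for each labeled movement."""
    sums, counts = {}, {}
    for label, feat in examples:
        acc = sums.setdefault(label, [0.0] * len(feat))
        for i, value in enumerate(feat):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, feat):
    """Return the movement whose centroid is closest to the features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feat))


def synthetic_window(gains, rng, n=200):
    """Hypothetical stand-in for a recording: noise per channel, scaled by a gain."""
    return [[rng.gauss(0.0, g) for _ in range(n)] for g in gains]


# Assumed activation patterns (noise scale per channel) for each movement.
PATTERNS = {
    "index_flex": (1.0, 0.2),
    "thumb_flex": (0.2, 1.0),
    "rest": (0.05, 0.05),
}

rng = random.Random(0)
training = [
    (label, features(synthetic_window(gains, rng)))
    for label, gains in PATTERNS.items()
    for _ in range(20)
]
centroids = fit_centroids(training)

# Decode a new, unlabeled window; the label would drive the exoskeleton.
intent = predict(centroids, features(synthetic_window(PATTERNS["thumb_flex"], rng)))
print(intent)
```

In a real system the predicted label would be mapped to motor commands for the glove, and the classifier would typically be far more capable than a nearest-centroid rule.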

“Our recent work is focused on the dexterity of the hand,” Hu said, explaining that allowing a person with disabilities to move individual fingers is very difficult and that most of the work in the field focuses on helping a hand open or close and do a two- or three-finger pinch. “But being able to move fingers individually really determines whether a person can live independently or not. If they don’t have good hand dexterity, they won’t be able to perform daily tasks like combing their hair, preparing meals or interacting with their smartphones. We want to improve hand dexterity to help people live a more or less independent lifestyle.”

Hu added that one of his recent publications specifically addresses this issue.

“What can we do to make their lives better?”

After graduate school, Hu worked as a researcher at Northwestern University’s Shirley Ryan AbilityLab and then at the University of North Carolina at Chapel Hill. He said that his time in rehabilitation hospitals, where he interacted daily with patients who were amputees or who had strokes, amyotrophic lateral sclerosis or other neural conditions, reinforced the impact that this research has.

“We would ask the patients a lot of questions such as what really bothers them when they go home, what can we do to make their lives better,” he said. “We might see a person who on day one couldn’t move their limb at all and then we would run a clinical trial with intensive therapy, and a few months later, they are able to pick up a cup by themselves. That’s huge.”

Hu recalled a stroke survivor enrolled in one of his lab’s studies. An engineer at an IT company, she needed to type constantly for her job. After experiencing a stroke, however, she was unable to move her arm and could not return to work.

“We had a two-month clinical trial with high-intensity training where we would stimulate her arm for two hours in a lab visit,” Hu said. “Through electrical stimulation, we got her muscle to do things like finger flexion and extension.”

On the first day, the participant could not move her hand at all.

“It was basically paralyzed,” Hu said. “Then, by the end of the two-month training, she was able to grab a small cup and move it from one location to another, and then pick up a pencil and draw a line. Essentially, she was able to regain some of her hand functions. She got very emotional in the last lab visit. Seeing that impact is the most meaningful aspect of our research.”

In their recent work, Hu and his team have further optimized the stimulation technique in ways that could enhance functional improvement in stroke survivors.

Pioneering new developments by mimicking biology

Hu’s next research steps include two main thrusts. The first is to have the AI algorithm that predicts the person’s intended movement adapt seamlessly over time to the user’s changing behavior or habits.

“We want to have the algorithm slowly adapt with the person so that the algorithm prediction accuracy remains high,” Hu said.
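One simple way to picture this kind of gradual adaptation is a classifier that nudges its stored pattern for a movement toward each new sample it recognizes. The Python sketch below is only an illustration under assumptions: the two movement names, the two-value feature vectors, the linear drift and the moving-average update rule are all hypothetical, and the source does not describe how Hu's algorithm actually adapts. It compares a fixed classifier with an adapting one as the "open" pattern slowly shifts.

```python
import math


def predict(centroids, feat):
    """Return the movement whose stored pattern is closest to the features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feat))


def adapt(centroids, feat, rate=0.2):
    """Predict, then move the winning pattern a small step toward the sample."""
    label = predict(centroids, feat)
    centroids[label] = [
        (1 - rate) * c + rate * x for c, x in zip(centroids[label], feat)
    ]
    return label


# Assumed starting patterns for two movements (two feature values each).
static = {"open": [1.0, 0.2], "close": [0.2, 1.0]}
adaptive = {label: list(values) for label, values in static.items()}

# Simulated drift: the user's "open" pattern shifts a little with every use,
# for example because of fatigue or a change in electrode contact.
steps = 50
for t in range(1, steps + 1):
    sample = [1.0 - 0.6 * t / steps, 0.2 + 0.5 * t / steps]
    adaptive_label = adapt(adaptive, sample)

static_label = predict(static, sample)

print("fixed classifier:", static_label)
print("adapting classifier:", adaptive_label)
```

Because this update trusts its own predictions, a wrong guess would pull the stored pattern in the wrong direction, so a practical system would likely need safeguards such as confidence thresholds or occasional recalibration.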

The second thrust is to convey touch sensations, such as the temperature, rigidity or other properties of an object held in the hand, back to the brain.

“When people lack some of that touch sensation, they need to rely on visual information instead,” Hu said. “The sense of touch is really critical when we use our hands and interact with objects. But assistive robots are not sending that information back to the brain via the nerves.”

Hu said that the nerves can be thought of as information pathways going back to the brain.

“Even if the hand is not working properly, the pathways are still there,” he said. “We can stimulate that nerve or the pathways to generate artificial signals that will trick the brain into feeling that sensation, so that the skin will feel pulsing or pressure sensations. It’s really just mimicking how the biological systems work.”

The approach of mimicking biology is one that Hu and his team have been taking in their work consistently, and one that they hope will propel the research forward as they continue to ask — and answer — the questions Hu first posed as an undergrad: “What is the major problem in the field? What are we missing?”