Picking up a cup of coffee, flipping a light switch or grabbing a door handle doesn’t require much apparent thought. But behind the curtain, the brain performs feats to coordinate these seemingly simple hand-to-object motions.

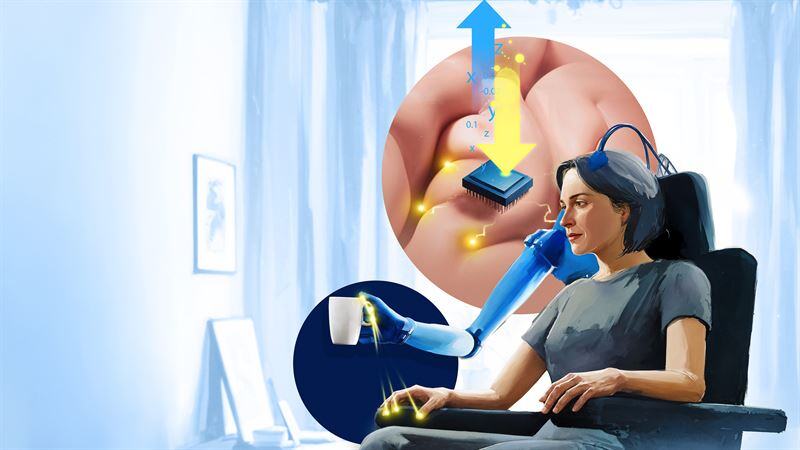

Using functional MRI brain imaging, or fMRI, University of Oregon researchers have unraveled some of the neural circuitry behind these kinds of actions. Their insights, described in a paper published in the journal eNeuro, can potentially be used to improve the design of brain-computer interface technologies, including brain-controlled prosthetic arms that aim to restore movement in people who have lost it.

“While there are already robotic arms or exoskeletons that take signals from brain activity to mimic the motions of a human arm, they haven’t quite met the gracefulness of how our arms actually move,” said study lead author Alejandra Harris Caceres, an undergraduate senior majoring in neuroscience and human physiology. “We’re hoping to figure out how and when the brain integrates different kinds of sensory information to help make this technology better for patients.”

Michelle Marneweck (left) is an assistant professor in human physiology, studying the neural processes that allow humans to dexterously interact with their environment. Alejandra Harris Caceres is a fourth-year undergraduate student majoring in neuroscience and human physiology. (Photos courtesy of Michelle Marneweck)

For the brain to plan even a simple, goal-directed action, like reaching out and grabbing a cup of coffee on a table, it needs to make lots of calculations, including the direction and distance of one’s hand to the object. To get even more accurate, the brain uses multiple “reference frames” to make calculations from multiple perspectives — for example, from the hand to the cup versus from the eyes to the cup.
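To make the idea of reference frames concrete, here is a minimal geometric sketch in Python. It is only an illustration of what "direction and distance from the hand versus from the eyes" means, not the researchers' analysis method or a model of how the brain does it. The positions of the cup, hand and eyes are hypothetical values in meters, and the function name `direction_and_distance` is made up for this example.

```python
import math

def direction_and_distance(origin, target):
    """Return (unit direction vector, distance) from origin to target."""
    distance = math.dist(origin, target)
    if distance == 0:
        raise ValueError("origin and target coincide, so direction is undefined")
    direction = tuple((t - o) / distance for o, t in zip(origin, target))
    return direction, distance

# Hypothetical 3D positions in meters (assumed values, not study data).
cup = (0.3, 0.4, 0.0)
hand = (0.0, 0.0, 0.0)
eyes = (0.0, 0.0, 0.5)

# The same target described in two reference frames.
hand_dir, hand_dist = direction_and_distance(hand, cup)  # hand-centered
eye_dir, eye_dist = direction_and_distance(eyes, cup)    # eye-centered

print("hand-centered:", hand_dir, hand_dist)
print("eye-centered: ", eye_dir, eye_dist)
```

The cup has not moved, yet each frame yields a different direction and a different distance. That is the sense in which the same reach can be computed from multiple perspectives.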

In this study, the researchers wanted to understand how the brain combines those different bits of sensory information to plan and generate a goal-directed action. While that has been investigated extensively in nonhuman primates such as monkeys, this is the first evidence in humans of neural representations of direction and distance in multiple reference frames during reach planning, said study senior author Michelle Marneweck, an assistant professor in human physiology at the UO’s College of Arts and Sciences.

“Just like how we use reference frames for navigation, like moving through the woods or driving through fog, we also use it to make the most elementary, everyday actions, such as reaching for a bottle of water,” Marneweck said. “Having a system rich in redundancies allows us to flexibly switch between reference frames, using the most reliable one in the moment, and be good at goal-directed actions, no matter what the environment throws at us.”

How the brain plans a reach

As part of the study, human subjects lay flat on a screening table inside an fMRI machine. Over their lap was a table with an electronic task board of lights and buttons. Because the participants couldn’t sit up during the scan, they wore a helmet-like setup with a mirror attached, allowing them to see and interact with the task board.

The fMRI scan measured blood flow in the brain to highlight active areas when subjects were prompted to reach for a button and when they pressed it. An analysis of neural activity patterns across key sensory and motor areas showed that the human brain prepares for reaching actions by encoding the target direction first, then the distance. The researchers also found that direction and distance are encoded in multiple reference frames rather than a single one.

Those findings could help improve the design of tools like bionic arms that use brain signals, Harris Caceres said. Neural interface technology that accounts for the serial processing of direction and then distance, and that incorporates multiple reference frames along with the multitude of regions encoding this information, could add an extra layer of sophistication to the modeling, she suggested.

“It is a really exciting time for brain-computer interface technology, which has advanced rapidly in recent years with the integration of sensory information. One of the overarching goals of our lab is to further optimize these technologies, by figuring out when, where and how the brain integrates sensory information for skilled actions. This experiment was a step in that direction.”